A Niche Content Creator

Transforming a 20-Year Archive into an Intelligent AI Assistant

Maximum Labs partnered with an expert content creator to transform their 20-year archive of specialized numerology articles into an interactive AI assistant. We delivered an end-to-end solution, from defining use cases and data strategy to building a full-stack RAG (Retrieval-Augmented Generation) prototype and writing comprehensive documentation for cloud deployment on AWS.

Use Case

Custom RAG & AI Assistant Development

Industry

Publishing & Digital Content

Team Size

2

Timeline

2 months

The Challenge

Our client, a leading expert in their field, had amassed two decades of valuable, proprietary articles. This rich knowledge base was difficult for their audience to search and engage with in a meaningful way. The client envisioned an AI-powered system that could answer user questions from their own content but, as a non-technical founder, lacked the AI and engineering expertise needed to build it.

Project Objectives

The primary goal was to create a working, intelligent prototype that could serve as the foundation for a new product offering. The key objectives were:

Define and score key use cases for an AI assistant based on the existing content.

Develop a robust methodology for ingesting, processing, and embedding the 20-year archive of articles.

Build a full-stack RAG prototype that could accurately answer user queries using only the provided documents as a source.

Create comprehensive documentation on how to implement the solution within an AWS infrastructure, process new documents, and evaluate the quality of user query responses.

Our Solution

Our engagement began with a deep-dive strategy session to define and prioritize potential use cases for the AI assistant. We then developed a custom data processing pipeline to handle the client's unique archive of documents.

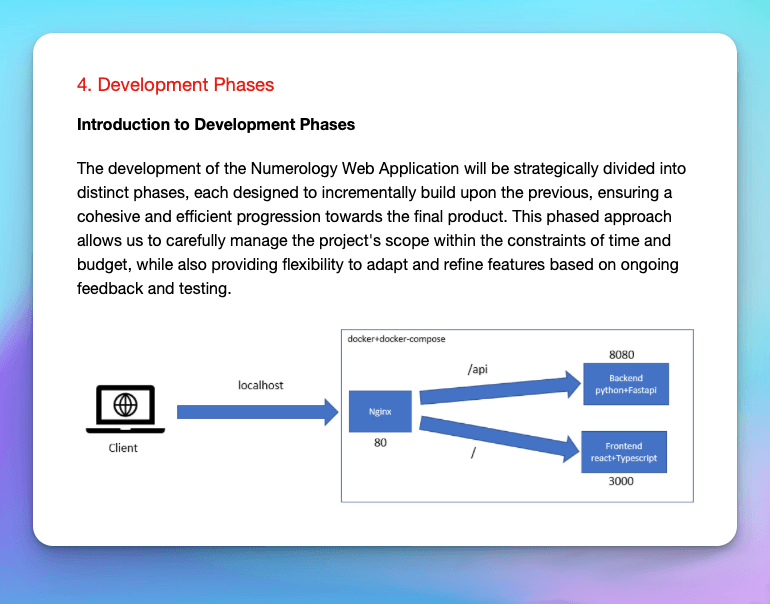

The core of our technical solution was a custom-built RAG system. This involved setting up a vector database to store the embedded content and engineering a retrieval and generation pipeline in which a Large Language Model (LLM) produces context-aware answers using only passages retrieved from the client's articles.
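To make the retrieve-then-generate idea concrete, here is a minimal sketch of the general pattern, not the system built for this project. It assumes a toy bag-of-words vector in place of a real embedding model and an in-memory list in place of a vector database; the function names (`embed`, `retrieve`, `build_prompt`) and the sample excerpts are hypothetical and invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term count."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble a prompt that restricts the LLM to the retrieved sources."""
    sources = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


# Hypothetical article excerpts, invented for this example.
ARCHIVE = [
    "The life path number is calculated from the full date of birth.",
    "Master numbers such as 11 and 22 are not reduced to a single digit.",
    "Our newsletter is published on the first Monday of each month.",
]

question = "How is the life path number calculated?"
prompt = build_prompt(question, retrieve(question, ARCHIVE, k=2))
```

In a production system the `embed` function would call an embedding model, the ranking would be handled by the vector database, and `prompt` would be sent to the LLM. The instruction to answer only from the listed sources is what keeps responses grounded in the archive.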

To demonstrate the system's capabilities, we built a full-stack prototype application that allowed for real-time interaction with the AI assistant.

Crucially, we delivered extensive documentation covering every aspect of the project. This included a guide to deploying and managing the application on AWS, a step-by-step process for adding new documents to the knowledge base, and a detailed methodology for scoring and evaluating the quality of the AI's responses to user queries. Together, these give the client full control over their new platform.
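The scoring methodology itself is not reproduced here. As an illustration of one common ingredient of such an evaluation, the sketch below computes a retrieval hit rate: the share of test questions for which the expected article appears among the top results. The function name, question labels, and article IDs are all hypothetical.

```python
def hit_rate_at_k(
    results: dict[str, list[str]],
    expected: dict[str, str],
    k: int = 3,
) -> float:
    """Share of questions whose expected article ID is in the top-k results."""
    if not expected:
        return 0.0
    hits = sum(
        1
        for question, doc_id in expected.items()
        if doc_id in results.get(question, [])[:k]
    )
    return hits / len(expected)


# Hypothetical evaluation set: ranked article IDs returned per question,
# and the article a reviewer marked as the correct source.
retrieved = {
    "q1": ["article-12", "article-07"],
    "q2": ["article-03", "article-44"],
}
gold = {"q1": "article-07", "q2": "article-19"}

score = hit_rate_at_k(retrieved, gold, k=2)
```

A check like this measures only whether the right source was found; judging the wording and accuracy of the generated answer typically still needs human review or a separate rubric.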
