Internal projects fail more often because they are misaligned with business outcomes and operations.

Alright, we have all been reading the takes. I hear the same line in hallways and comment threads: “AI is just another bubble bleeding money. Big companies are spending billions on the next big thing, and it is all smoke-and-mirrors prototypes.” This is not our first rodeo. Back in the 2010s I helped ship more than a few backroom demos for CES at Gracenote while machine learning was still hunting for market fit. Same energy then as now. It is easy to sell an idea. Matching use cases to reality is the hard part.

Since then, we actually learned how to execute ML projects. We got cycles of wins and losses, and a body of practice emerged. Books, articles, practitioners. The best advice is boring and correct: check whether the data maps to the business imperatives before you light the money on fire.

So here is the deal: headlines say the “AI bubble” is about to burst. The tech is not failing. Execution is. And yes, we have seen this pattern before, and I am old enough to say it out loud. A widely cited MIT/NANDA analysis argues that despite tens of billions poured into GenAI, about 95% of organizations see no P&L impact, with only a small minority extracting real value. The more you look at the misses, the clearer the pattern: pilots never anchor to business outcomes or actual workflows, so they stall before production (Source: MLQ[1]). In a world built for doomscrolling, this should trigger questions, not performative conclusions.

We have seen this movie more than once:

Dot-com era: having a website was not strategy. Integrating ecommerce with supply chain and finance was.

Big data wave: companies hired PhDs before defining the business question. Dashboards multiplied. Decisions did not.

GenAI is the same plot with new costumes. People confuse a tool with a strategy. This guide shows how to avoid the 95% and build systems that actually move your P&L (Source: Boston Consulting Group[2]). I cannot tell you how many meetings I have sat through where no one cites real data, and the only topic is a shiny model and wishful outcomes.

The Data Lake Fallacy: Centralize everything and value will appear. Reality check: analysts like Gartner reported a huge share of these lakes delivered no ROI and turned into swamps because governance and use cases were an afterthought. I helped write a book[8] on why this keeps happening.

The MLOps Last Mile: We all became notebook artists. Then half the orgs could not get a single model into production. The algorithm was not the problem. The missing piece was deployment, monitoring, and a plan for scale. Something that works in a lab still needs to be built by the larger organization and observed for real value over time.

It took me exactly 52 interviews to land my first engineering job during this extremely tough time.

The bubble is not AI. It is pilots with no outcomes, no ops fit, and no success criteria. Do not spend until you know how you will win.

2) What the latest research actually says (and what it does not)

Key data points. A preliminary “State of AI in Business 2025” deck tied to MIT’s NANDA initiative claims roughly 95% of organizations reviewed received zero return from GenAI initiatives, and only about 5% of integrated pilots delivered tangible value. It also notes that vendor or specialist partnerships tend to perform about twice as well as purely internal builds, which tracks with faster integration and clearer scoping from teams that have scars. Source: MLQ[1]

Method notes. The deck references a mixed-methods approach that combines a review of many public initiatives with interviews and leader surveys. Treat the figures as directional. Useful, not holy. Source: MLQ[1]

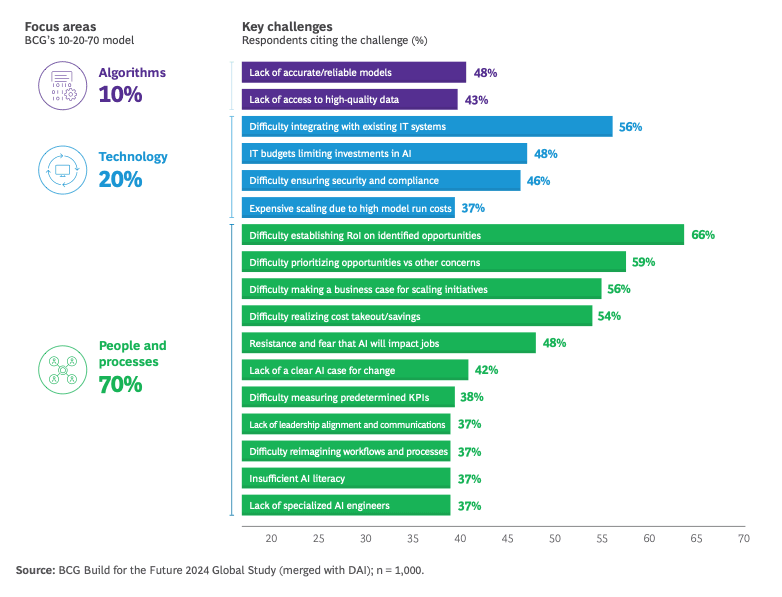

Triangulation. BCG has been saying a similar thing. Only a small slice of companies realize substantial AI value. The winners redesign workflows, get leadership out front, and build strong data and platform foundations. Translation: execution. Source: Boston Consulting Group[2]

Caveat. Some analysts push back on the MIT/NANDA framing. A smart move is to anchor on the direction, not the exact number. Many pilots stall. A minority create real impact. Execution explains most of the variance. Source: Futuriom[3]

Large firms have also shifted their message. The tech sets the ceiling, but the “how” creates the outcome. It is the same movie with a different cast.

Bottom line. Technology is not the bottleneck. Org design, workflow integration, incentives, and MLOps are.

3) The execution gap: 7 failure patterns

Let us talk about where things actually crack. No drama, just patterns I keep seeing.

Outcome blur

Who owns the P&L impact? If you cannot name a single person, you do not have a project. We watched a team ship a slick “notes summarizer.” Sales shrugged. They needed real-time objection extraction, not a wall of prose.

Workflow mismatch

Does the output fit the way people work today? One insurance pilot improved accuracy on paper. Adjusters ditched it by week two because it added clicks and offered no escalation path for edge cases.

Data readiness denial

You want a ‘customer 360’ RAG. Nice. Try doing that with five CRMs, four ID schemes, no golden customer record, and no evaluation set of real questions with expected answers. The project froze at ingestion. Predictably.

Tool-first thinking

Buying platforms before defining a job to be done is expensive cosplay. A bank bought two GenAI suites. Nine months later, still nothing in production because procurement moved faster than product.

MLOps as an afterthought

Models do not fail quietly. Latency slid from 800 ms to six seconds under load. There was no autoscaling and no rollback. Trust evaporated and the pilot went into the drawer.

Change friction

Incentives drive behavior. One group kept manual QA because bonuses were tied to the old steps that AI removed. No surprise the “new” system looked optional.

Build-only bias

Some teams try to shoulder everything in-house. Then a year goes by in “pilot.” We inherited one of those and shipped a narrower production version in six weeks by bringing in the right specialists.

Most AI failures are org failures wearing a model-shaped hat.

4) A pragmatic playbook to cross the chasm

Step 1 — Outcome Lock-In (Non-Negotiable)

Before you write code, name the P&L lever you intend to move. Revenue, margin, cycle time, or risk. Assign one accountable executive owner. Define success criteria and kill criteria up front. Lock an evaluation harness before the build: a golden dataset, acceptance thresholds that matter to the business (for example, factual accuracy above 85 percent and harmful responses below 2 percent), latency SLOs, and a cost-per-request budget.
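Here is a minimal sketch of what "locking the harness" can look like in code. It is an illustration, not a prescribed implementation. The 85 percent accuracy and 2 percent harmful-response thresholds come from the example above. The latency SLO, the cost budget, and every name (`Thresholds`, `EvalResult`, `gate`) are assumptions you would replace with your own.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    min_accuracy: float = 0.85           # from the text: accuracy above 85 percent
    max_harmful_rate: float = 0.02       # from the text: harmful responses below 2 percent
    max_p95_latency_ms: float = 2000.0   # hypothetical latency SLO
    max_cost_per_request: float = 0.05   # hypothetical budget, in dollars


@dataclass(frozen=True)
class EvalResult:
    """One golden-dataset example after it has been run and graded."""
    correct: bool
    harmful: bool
    latency_ms: float
    cost: float


def p95(values):
    """Nearest-rank 95th percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return ordered[rank - 1]


def gate(results, thresholds=Thresholds()):
    """Return (passed, per-check detail) for a graded evaluation run."""
    if not results:
        raise ValueError("the golden dataset produced no results")
    n = len(results)
    accuracy = sum(r.correct for r in results) / n
    harmful_rate = sum(r.harmful for r in results) / n
    checks = {
        "accuracy": accuracy > thresholds.min_accuracy,
        "harmful_rate": harmful_rate < thresholds.max_harmful_rate,
        "p95_latency": p95([r.latency_ms for r in results]) <= thresholds.max_p95_latency_ms,
        "cost_per_request": sum(r.cost for r in results) / n <= thresholds.max_cost_per_request,
    }
    return all(checks.values()), checks
```

The gate reports each check separately, so the readout can say which threshold missed instead of a bare pass or fail.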

Example: a logistics company targeted faster freight invoice processing. The VP of Finance owned the outcome. The goal was a 40 percent cut in manual processing time with 98 percent extraction accuracy. If the prototype did not beat 10 percent improvement after the first sprint, the project would stop. Stanford’s 2024 AI Index has been banging this drum for a while, and they are right. Source: Stanford University HAI[4]
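The success and kill criteria from the invoice example can be written down just as plainly. This is a sketch under two assumptions: processing time is measured in minutes per invoice, and "did not beat 10 percent" means a reduction of 10 percent or less. The function name and the return labels are made up for illustration.

```python
def sprint_decision(baseline_minutes, measured_minutes, extraction_accuracy,
                    kill_at_or_below=0.10, goal_reduction=0.40, goal_accuracy=0.98):
    """Apply the pre-agreed kill and success criteria after a sprint."""
    if baseline_minutes <= 0:
        raise ValueError("baseline must be positive")
    # Fractional cut in manual processing time relative to the baseline.
    reduction = (baseline_minutes - measured_minutes) / baseline_minutes
    if reduction <= kill_at_or_below:
        return "stop"
    if reduction >= goal_reduction and extraction_accuracy >= goal_accuracy:
        return "goal met"
    return "continue"
```

With a hypothetical baseline of 10 minutes per invoice, a prototype that gets to 9.5 minutes is a stop, 8 minutes is a continue, and 5 minutes at 98 percent extraction accuracy meets the goal.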

Step 2 — Use-Case Scoring

Score candidates on business value, data readiness, operational fit, and governance risk. Do not boil the ocean. A software company weighed two options: a big-bang chatbot for all new customer questions (high value, low data readiness) or a summarizer for Tier 2 support tickets (moderate value, high data readiness). They picked the summarizer. Faster proof. Lower risk. Better signal.
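A scoring pass like this fits in a few lines. The sketch below assumes a 1 to 5 scale and equal weights, neither of which comes from the example above. The story only says "high", "moderate", and "low", so the numbers are my illustrative stand-ins, and the operational-fit and governance-risk scores are invented outright.

```python
from dataclasses import dataclass

# Equal weights are an assumption; tune them to your own priorities.
WEIGHTS = {
    "business_value": 0.25,
    "data_readiness": 0.25,
    "operational_fit": 0.25,
    "governance_risk": 0.25,
}


@dataclass(frozen=True)
class UseCase:
    name: str
    business_value: int    # 1 (low) to 5 (high)
    data_readiness: int    # 1 (low) to 5 (high)
    operational_fit: int   # 1 (low) to 5 (high)
    governance_risk: int   # 1 (low risk) to 5 (high risk)


def score(use_case, weights=WEIGHTS):
    """Weighted score on a 1-5 scale; governance risk is inverted so lower risk scores higher."""
    total = 0.0
    for field, weight in weights.items():
        value = getattr(use_case, field)
        if not 1 <= value <= 5:
            raise ValueError(f"{field} must be between 1 and 5")
        if field == "governance_risk":
            value = 6 - value
        total += weight * value
    return total


candidates = [
    UseCase("big_bang_chatbot", business_value=5, data_readiness=1,
            operational_fit=3, governance_risk=4),
    UseCase("tier2_ticket_summarizer", business_value=3, data_readiness=5,
            operational_fit=4, governance_risk=2),
]
ranked = sorted(candidates, key=score, reverse=True)
```

The value is less in the arithmetic than in forcing the team to write a number next to data readiness before anyone falls in love with the demo.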

Step 3 — Thin-Slice Sprint (4–6 Weeks)

Ship one testable slice tied to the outcome metric. Not a sprawling pilot. A focused experiment. Build with the evaluation harness and guardrails from day one. That includes prompt tests, safety checks, and PII handling. Deliver a demoable artifact and a one-page readout: baseline, measured lift, and the instrumentation plan. For the ticket summarizer, the four-week sprint produced a simple API. Feed it a ticket ID, get a three-bullet summary. The team ran 1,000 tickets through the harness and showed threshold hits and an average of 90 seconds saved per ticket.
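The one-page readout is mostly arithmetic, and it helps to script it so the numbers are reproducible. A sketch, assuming you have paired handling times per ticket, one without the summary and one with it. The function and field names are hypothetical, and the 600-second baseline in the usage note is a made-up figure chosen so the result matches the 90 seconds saved in the story.

```python
from statistics import mean


def sprint_readout(baseline_seconds, assisted_seconds):
    """Summarize baseline, measured lift, and total time saved for paired tickets."""
    if not baseline_seconds or len(baseline_seconds) != len(assisted_seconds):
        raise ValueError("need one baseline and one assisted time per ticket")
    saved = [b - a for b, a in zip(baseline_seconds, assisted_seconds)]
    baseline_avg = mean(baseline_seconds)
    avg_saved = mean(saved)
    return {
        "tickets": len(saved),
        "baseline_avg_s": baseline_avg,
        "avg_saved_s": avg_saved,
        "lift_pct": 100 * avg_saved / baseline_avg,
        "total_hours_saved": sum(saved) / 3600,
    }
```

For 1,000 tickets at a hypothetical 600-second baseline and 510 seconds with the summary, that is 90 seconds saved per ticket, a 15 percent lift, and 25 hours across the run.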

Step 4 — Productionization Plan

Design the operating loop before you scale. Map data handoffs, human-in-the-loop escalation, permissions, observability, SLOs and SLAs, rollback, and audit trails. Treat MLOps as a first-class concern, not a retrofit. That means CI and CD for models, drift detection, traceability, and sane cost budgets. The ticket summarizer had a “thumbs up or down” control for agents. Downvotes were logged automatically to surface drift. No committee meeting required. Source: Google Cloud[5]
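The thumbs up or down loop is simple enough to sketch. The version below keeps a rolling window of recent votes and raises a flag when the downvote rate crosses a threshold. The window size, the 20 percent alert rate, the minimum vote count, and the class name are all assumptions for illustration, not details from the summarizer project.

```python
from collections import deque


class FeedbackMonitor:
    """Rolling downvote-rate check over the most recent agent votes."""

    def __init__(self, window=200, alert_rate=0.20, min_votes=50):
        self.votes = deque(maxlen=window)  # True = thumbs up, False = thumbs down
        self.alert_rate = alert_rate
        self.min_votes = min_votes

    def record(self, thumbs_up):
        """Log one vote and report whether the alert condition now holds."""
        self.votes.append(bool(thumbs_up))
        return self.should_alert()

    def downvote_rate(self):
        if not self.votes:
            return 0.0
        return sum(1 for vote in self.votes if not vote) / len(self.votes)

    def should_alert(self):
        # Require a minimum sample so a couple of early downvotes do not page anyone.
        return len(self.votes) >= self.min_votes and self.downvote_rate() > self.alert_rate
```

A rising downvote rate is only a proxy for drift. It tells you to go look, and the evaluation harness tells you what actually changed.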

Step 5 — Change Management

Adoption beats demos, every time. Update SOPs, train owners, and line up incentives with the new workflow. MIT Sloan has been clear on this for years. The blockers are organizational and cultural more than technical. For the summarizer, the support team added a simple metric, “tickets resolved using the AI summary,” and the team lead opened each weekly meeting with one specific win. Source: MIT Sloan Management Review[6]

Step 6 — Partner Where Friction Peaks

Be honest about where your team needs help. Bring in specialists for evaluation design, retrieval architecture, or MLOps hardening when time-to-value matters. Gartner’s work on AI adoption lines up with this. One retailer had a solid recommendation model but could not deploy real-time inference at scale. An MLOps partner architected the serving stack and cut the go-live from six months to eight weeks. Source: Gartner[7]

5) Build vs Buy vs Partner

This is not just a technical choice. It sets your speed, cost, and differentiation. Get it right.

AI Solution Strategy: Buy vs. Partner vs. Build

This table covers a simple decision framework so you can pick the path that fits your reality.

| Factor | BUY 🛒 | PARTNER 🤝 | BUILD ⚙️ |
|---|---|---|---|
| Best For | • Commoditized problems • When compliance permits • Rapid rollout with predictable cost | • When speed is critical • Expertise or capacity is a bottleneck • Help is needed with evals, MLOps, or integration | • High operational fit and data readiness • Controlling IP is crucial • Engineering for cost and performance at scale |
| Strategic Alignment | • Fastest time to value • Lowest internal effort • Lowest customization | • Balanced time to value • Shared internal and external effort • Flexible customization | • Slowest time to value • Highest internal effort • Highest control and customization |

BUILD (the control path): Use this when your operational fit is high, your data is ready, and the capability is core to your IP. You get maximum control over cost and performance at scale. It is slow and resource intensive. Save it for crown jewels, not experiments.

BUY (the speed path): If the problem is commoditized and compliance allows, buying gives you the fastest path and predictable cost. Great for transcription, basic bots, or anything that will not differentiate you.

PARTNER (the accelerator path): Use this when you need speed and the problem still requires integration with your unique workflows and data. The right specialist removes the bottlenecks, whether that is evaluation, MLOps, or complex integration. You move faster without losing the shape of your solution.
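If it helps to see the three paths as explicit rules, here is one way to encode them. Treat it as a conversation starter, not a policy. The conditions come from the descriptions above, but the order of the checks, the fallback to "partner", and all the field names are my assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Situation:
    commoditized: bool              # the problem will not differentiate you
    compliance_allows_vendor: bool  # compliance permits an off-the-shelf product
    core_to_ip: bool                # the capability is a crown jewel
    high_operational_fit: bool
    data_ready: bool
    speed_critical: bool
    expertise_bottleneck: bool      # evals, MLOps, or integration skills are missing


def recommend(s):
    """Return 'buy', 'build', or 'partner' for a given situation."""
    if s.commoditized and s.compliance_allows_vendor:
        return "buy"
    ready_to_build = s.core_to_ip and s.high_operational_fit and s.data_ready
    if ready_to_build and not (s.speed_critical or s.expertise_bottleneck):
        return "build"
    # Assumed fallback: anything that needs speed, outside expertise, or custom
    # integration without being build-ready goes to a specialist.
    return "partner"
```

Real decisions will have more nuance than seven booleans, but writing the rules down makes it obvious when a team is choosing "build" without meeting its own preconditions.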

References & Notes

1. MIT / Project NANDA (2025). “State of AI in Business 2025” (deck). Directional claim that ~95% of organizations see zero ROI; ~5% extract value; partnerships ≈2× success vs internal builds. Preliminary; treat as directional. https://mlq.ai/media/quarterly_decks/v0.1_State_of_AI_in_Business_2025_Report.pdf

2. Boston Consulting Group (2024–2025). “Where’s the Value in AI?” Only a small fraction realize substantial AI value; winners redesign workflows, engage leadership, and build strong data/tech foundations. https://www.bcg.com/publications/2024/wheres-value-in-ai

3. Futuriom (2025). “Why We Don’t Believe MIT-NANDA’s Weird AI Study.” Critique of methodology and framing; useful as a caveat. https://www.futuriom.com/articles/news/why-we-dont-believe-mit-nandas-werid-ai-study/2025/08

4. Stanford HAI (2024). AI Index Report 2024, Chapter 6 (Industry). Grounding initiatives in measurable impact. https://aiindex.stanford.edu/wp-content/uploads/2024/04/HAI_AI-Index-Report-2024_Chapter-6.pdf

5. Google Cloud. MLOps whitepaper. CI/CD for models, monitoring, drift detection, cost controls. https://cloud.google.com/resources/mlops-whitepaper

6. MIT Sloan Management Review (2022). “Winning with AI.” Organizational and cultural factors drive adoption. https://sloanreview.mit.edu/projects/winning-with-ai/2022

7. Gartner. “3 Barriers to AI Adoption.” Partnerships and org readiness matter. https://www.gartner.com/en/articles/3-barriers-to-ai-adoption

8. The Informed Company (book). Data strategy, governance, and use-case alignment. https://theinformedcompany.com

Ship a board-ready roadmap

My shameless tie-in: this is the 4-week sprint where we interview your execs, audit the mess behind the dashboards, and hand back a board-ready action plan with ROI math you can defend.

Once you’ve got the roadmap, grab a Data-to-Value Sprint to prove the top bet or toss it to an Engineering & MLOps Pod to start shipping.